This is a copy-paste of a Slack discussion, which we can now continue here.

I was watching this

And thought it might make a more interesting starting point for AI than a finite state machine. If we made a simple neural network for each species, with inputs (compound concentrations in the environment and in the microbe, and certain points on the cell wall that could sense by touch) and outputs (whether to move, or to release agents), then we could have an AI that evolves along with the species it is in.
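To make the idea concrete, here is a minimal sketch of what "a simple neural network for each species" could look like. Everything in it is an assumption for illustration: the four sensor inputs, the three outputs, the hidden-layer size and the names `Brain`, `random_init` and `act` are hypothetical, not anything Thrive has.

```python
import math
import random
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical sensor layout (an assumption for illustration, not a Thrive design):
# [external compound, internal compound, touch sensor front, touch sensor back]
N_INPUTS = 4
N_HIDDEN = 6
# Hypothetical outputs: [move_x, move_y, release_agent]
N_OUTPUTS = 3


@dataclass
class Brain:
    """One-hidden-layer network; its weights are what evolution would mutate."""
    w_in: List[List[float]]   # N_HIDDEN rows of N_INPUTS weights
    w_out: List[List[float]]  # N_OUTPUTS rows of N_HIDDEN weights

    @staticmethod
    def random_init(rng: random.Random) -> "Brain":
        return Brain(
            w_in=[[rng.uniform(-1, 1) for _ in range(N_INPUTS)] for _ in range(N_HIDDEN)],
            w_out=[[rng.uniform(-1, 1) for _ in range(N_HIDDEN)] for _ in range(N_OUTPUTS)],
        )

    def act(self, sensors: List[float]) -> Tuple[Tuple[float, float], bool]:
        # Weighted sums squashed by tanh keep every value in [-1, 1]
        hidden = [math.tanh(sum(w * s for w, s in zip(row, sensors))) for row in self.w_in]
        out = [math.tanh(sum(w * h for w, h in zip(row, hidden))) for row in self.w_out]
        move_x, move_y, release = out
        # Movement is a direction; the agent is released only if its output is positive
        return (move_x, move_y), release > 0.0
```

For example, `Brain.random_init(random.Random(42)).act([0.8, 0.1, 0.0, 1.0])` returns a movement direction and a release decision. A fixed-size network like this is the simplest option; its weights are the "genome" that the generational scheme described next would select and mutate.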

So I guess it would work like this: every time a member of species A spawns while the player is playing, it gets a variant of the current generation of neural network for that species. Its fitness then gets rated (did it die, did it gain resources, etc.), and once that generation of variants has been run through, the best get selected and mutated for the next generation.
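The spawn / rate / select-and-mutate cycle described above can be sketched as below. This is a minimal sketch assuming a flat list of weights as the genome, truncation selection with elitism and Gaussian mutation; the population size, elite count, mutation strength and the `SpeciesEvolver` class are all hypothetical choices, not a settled design.

```python
import random
from typing import Dict, List, Tuple

Genome = List[float]  # e.g. the flattened weights of one network

GENOME_SIZE = 8     # hypothetical number of weights
POP_SIZE = 20       # variants per generation (assumption)
ELITE = 5           # best variants kept and bred from (assumption)
MUTATION_SD = 0.1   # std dev of Gaussian noise added per weight (assumption)


def mutate(genome: Genome, rng: random.Random) -> Genome:
    return [w + rng.gauss(0.0, MUTATION_SD) for w in genome]


class SpeciesEvolver:
    """Hands a variant to each spawning member, collects fitness, breeds the next generation."""

    def __init__(self, rng: random.Random) -> None:
        self.rng = rng
        self.generation: List[Genome] = [
            [rng.uniform(-1, 1) for _ in range(GENOME_SIZE)] for _ in range(POP_SIZE)
        ]
        self.scores: Dict[int, float] = {}
        self.next_index = 0
        self.generation_count = 0

    def spawn(self) -> Tuple[int, Genome]:
        # If more members spawn than there are variants, variants are reused
        # and a later report overwrites an earlier score for the same index.
        i = self.next_index % POP_SIZE
        self.next_index += 1
        return i, self.generation[i]

    def report(self, index: int, fitness: float) -> None:
        # Fitness could combine survival time, resources gained, etc.
        self.scores[index] = fitness
        if len(self.scores) == POP_SIZE:
            self._breed()

    def _breed(self) -> None:
        ranked = sorted(self.scores, key=self.scores.get, reverse=True)
        parents = [self.generation[i] for i in ranked[:ELITE]]
        # Elitism: parents survive unchanged; the rest are mutated copies of them
        children = [mutate(self.rng.choice(parents), self.rng) for _ in range(POP_SIZE - ELITE)]
        self.generation = parents + children
        self.scores = {}
        self.next_index = 0
        self.generation_count += 1
```

In use, the game would call `spawn()` when a member of the species appears and `report(index, fitness)` when it dies or despawns. Keeping the elite unchanged means the best score in a generation can never drop, which helps when each generation is evaluated in different, noisy spawn conditions.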

If we could get it to work well, it would make for an interesting play experience, as the other microbes would learn to act more cleverly around you and fight you better over time.

It would also be a good foundation for similar systems when brains are available.

There are issues. For example, in the Mario video the level is the same every time, but in the microbe stage the spawn conditions are different every time, so that might throw off the learning. Also, is it at all reasonable to give a microbe a neural network? Presumably when a real microbe “decides”, it’s a chemical network of some kind.

We could build a petri-dish to evolve the ai in where the conditions are the same every time to give it a head start if we needed that.

What do you guys think? Interesting or a waste of time?

I guess in terms of workload, making a finite state machine means we have to think a lot about the situations the microbes get into, and if we change the game (add new biomes or new compounds) then the AI will need to be changed. If we make something dynamic like this, it will work in all conditions.

Cool idea! Given that intelligence is a major evolutionary trait anyway, it’d give the player more of a challenge as well as representing another level of fitness beyond just body functions. My only worries are, first, that such a system would have to tie in with population dynamics, which would involve comparing the success of behavioural algorithms between species; and second, that the AI would have to be somewhat advanced to start off with, and balancing that against generation time might prove difficult. The latter could be partially solved either by your petri dish idea, or by giving the AI a simple base to start with (including basic commands like swim away from predators and swim towards compounds) and letting it develop in-game from there.

Player interaction could also play a part, since they’ll be creating their species’ own behavioural system themselves, using similar inputs and outputs with generation-based iterations. There was actually a discussion about this recently on the fan forums of all places which brought up some interesting points: http://thrivegame.freeforums.net/thread/12/multicellular-behavior-editor-concept-discussion

We’ve actually discussed this before, in this thread, I think: http://thrivegame.canadaboard.net/t1011-crash-course-into-ai

That’s definitely not to say we shouldn’t discuss it more now - that was a long time ago, long before we had a game which needed AI, and the exact form of AI proposed was a little different. I’m also not going to suggest everyone reads it, as we’re likely to come up with fresher ideas if we start from scratch, but I thought I’d link it for reference. On a side note, that thread was the reason I was asking after Daniferrito a while back, as I kind of wanted to reopen the AI discussion, prompted by the fan forum thread oliver linked.

That aside - for some of the reasons mentioned by tjwhale, and many besides, I’ve always wanted to avoid using a finite state machine wherever possible. They’re too rigid (especially when we don’t know what most of the creatures in our game are even going to look like, let alone do), rely too much on the imagination of the programmer (i.e. they’re effectively hard-coded behaviour, something every programmer knows is a bad idea), and are very hard to make interesting.

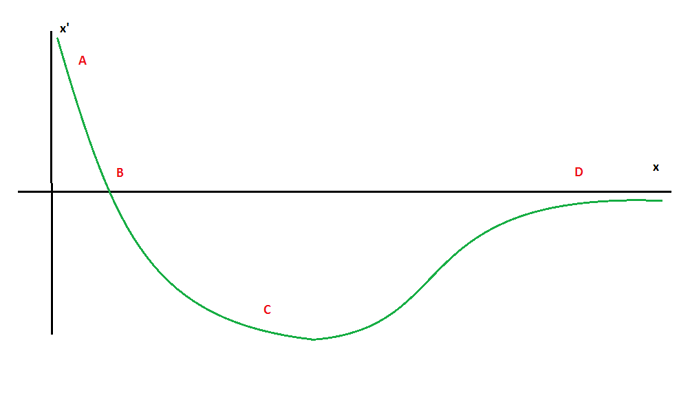

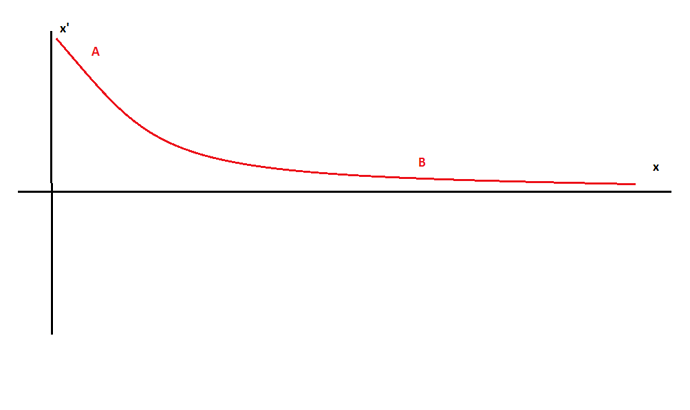

The question, then, is what’s the alternative? The obvious answer is that it needs to be adaptable, and ideally somewhat evolvable, which gives plenty of options. Neural networks are a fairly simple option, and I think what Dani was describing in his thread was a more direct alternative, where rather than evolving a neural structure which responds to simple stimuli, you evolve a function which reacts to multiple stimuli. The end result is similar (one or the other is probably better suited to our application, though I’m not sure which, and I’ll leave that question for another time) - a system which attempts to survive/do well given a situation/stimulus, is selectively bred based on its performance, and eventually develops an optimal response to that stimulus.

The problem (if there is one) is the amount of stimulus needed to evolve a reasonable AI. As mentioned in the video, it took 24 hours to create the solution seen there, and in my own experiments it can take 5-6 hours of solid simulation to get solutions to even much simpler challenges. We don’t have time for that in game, and even if we did, the population of each creature active at any one time will be tiny compared to the numbers required for effective evolution (my 5-6 hour experiment involved a population of around 500, with generations lasting no more than a minute). On top of that comes the sheer complexity of the game, with many potential stimuli both outside (sight/smell/harm/compound gradients/temperature/light…) and inside the cell (compound levels, existing damage, toxin effects), and many potential responses. Finally, the whole situation isn’t stable: there are multiple completely different scenarios possible over the course of one lifetime (scavenging for food when young and weak, avoiding predation, attacking weaker cells, scavenging for rarer nutrients, avoiding hostile environments, etc.), let alone the fact that all of this could change drastically whenever the species itself evolves, or any other species around it does.
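Putting rough numbers on that experiment, using only the figures quoted above (5-6 hours, generations of at most a minute, a population of around 500):

$$
\begin{aligned}
\frac{300 \text{ to } 360\ \text{min}}{\le 1\ \text{min per generation}} &\;\Rightarrow\; \ge 300 \text{ to } 360\ \text{generations} \\
(300 \text{ to } 360\ \text{generations}) \times 500\ \text{cells} &\approx 1.5 \text{ to } 1.8 \times 10^{5}\ \text{evaluated lifetimes}
\end{aligned}
$$

Even a generous in-game population would need a very long time to accumulate that many lifetimes for a single species.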

In short, no, we can’t just use a neural network; it just wouldn’t produce good, or interesting, results. But rather than this being the end of the discussion, I think it’s the start. We would like to use an adaptable system, but what? Does one exist that we could just use, or can we adapt an existing one to work in our situation (which is relatively unique in terms of its constraints, i.e. we want relatively real-time results)? This is a discussion I’ve been wanting to have for years, but it was never the right time to start it.

I’d suggest we start by propping up what the AI can do: as oliver suggests above, we give it basic behaviours to start with (“swim away from hostiles”, “swim towards food”), but we need to go beyond this. I’m not sure exactly how, but now might be a good time to start thinking about it. Can we modularise behaviour? I.e. rather than a neural net where a neuron fires only if it receives above a set stimulus, can we have more complicated behaviour for each neuron? I have some further ideas, but first I’d like to hear which direction you guys think we should go.
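One way to read "more complicated behaviour for each neuron" is sketched below: each unit is still gated by a threshold, but when it fires it contributes a whole built-in behaviour (flee, seek food) rather than a single number, and evolution would only tune the thresholds and gains. This is a hypothetical illustration of the question, not a proposal from the thread; the sensor names, `BehaviourModule` and `decide` are all made up.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Vec = Tuple[float, float]
Sensors = Dict[str, Vec]


def threshold_neuron(stimulus: float, threshold: float) -> float:
    """A plain neuron: fires (1.0) only when the stimulus exceeds its threshold."""
    return 1.0 if stimulus > threshold else 0.0


# Hypothetical built-in behaviours the AI would start with
def flee(sensors: Sensors) -> Vec:
    x, y = sensors["nearest_predator"]  # direction towards the nearest predator
    return (-x, -y)


def seek_food(sensors: Sensors) -> Vec:
    return sensors["compound_gradient"]  # direction of increasing compound


@dataclass
class BehaviourModule:
    """A 'richer neuron': a threshold unit that gates a whole behaviour."""
    behaviour: Callable[[Sensors], Vec]
    trigger: str       # which scalar level activates this module
    threshold: float   # evolvable
    gain: float        # evolvable

    def output(self, sensors: Sensors, levels: Dict[str, float]) -> Vec:
        if threshold_neuron(levels[self.trigger], self.threshold) == 0.0:
            return (0.0, 0.0)
        dx, dy = self.behaviour(sensors)
        return (self.gain * dx, self.gain * dy)


def decide(modules: List[BehaviourModule], sensors: Sensors, levels: Dict[str, float]) -> Vec:
    # Sum every active module's vote into one movement vector
    outputs = [m.output(sensors, levels) for m in modules]
    return (sum(o[0] for o in outputs), sum(o[1] for o in outputs))
```

For example, with a flee module (trigger `"danger"`, threshold 0.5, gain 2.0) and a food module (trigger `"hunger"`, threshold 0.3, gain 1.0), high danger and low hunger produce pure fleeing, while high values of both blend the two. Because the behaviours are hand-written and only a few parameters evolve, the search space is far smaller than for a raw network, which is the trade-off the paragraph above is asking about.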

A lot of the above concerns also apply to the behaviour editor: if it’s too complicated to evolve a solution to AI behaviour, it’ll be too difficult or tedious for most players to do the same for their species.