The AI of the microbe stage is already reaching a state where it is fun to play with and against, and in some instances it makes for quite the challenge, whether you are predator or prey. However, as far as I have seen, there has been little to no talk about catching the current AI up with implemented features (pilus, colonies, new organelles, sessility, etc.), nor about how to future-proof it for later stages.

I come bearing a possible solution: Utility-based AI and modular behaviors.

For those who don’t know and don’t want to sit through a 3-hour programming lecture, I will boil both of these down. Basically, Utility-based AI is AI that has a set of tasks and calculates the usefulness (or Utility) of each one using an algorithm specific to that task.

Here is a strong YouTube video on the subject:

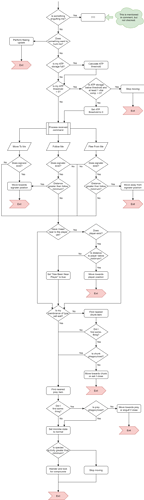

Now, you would be right to point out that this is similar to the system that exists currently. The main difference is that, in the current system, each behavior an AI microbe can perform is mixed in with the calculation, and there is a fixed order of priority among the behaviors (e.g. microbes will ALWAYS try to search for chunks before tumbling, and will ALWAYS try to search for prey before either). With Utility AI, this would be broken up into three phases: Analysis, Decision, and Action.

Analysis

This stage would occur at the beginning of an AI’s “thought process”, so to speak. The AI would take in every needed piece of data from the environment and calculate a Utility Value for each task from it. An example of this would be as follows:

The utility of the action “Gather Glucose from Cloud” would be calculated by analyzing whether Glucose is the primary food source of the AI, its current Glucose levels, how much it needs to keep operating for an arbitrary amount of time, the size of the closest glucose cloud, and whether the AI would gain more from consuming a prey item. Of course, this is an arbitrarily constructed example, and would likely be different in the final implementation.
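To make that a bit more concrete, here is a minimal Python sketch of what such a calculation could look like. Everything in it is an assumption for illustration: the `GlucoseContext` fields, the 0 to 1 scoring range, the half weight for non-primary food, and the way the prey score discounts the result are not taken from Thrive's code.

```python
from dataclasses import dataclass


@dataclass
class GlucoseContext:
    """Hypothetical snapshot of what the analysis phase gathered."""
    glucose_is_primary_food: bool
    stored_glucose: float       # units currently held
    glucose_needed: float       # units needed to run for the chosen time window
    nearest_cloud_size: float   # units available in the closest cloud
    best_prey_utility: float    # 0..1 score already computed for hunting


def clamp01(value: float) -> float:
    """Keep a score inside the 0..1 range."""
    return max(0.0, min(1.0, value))


def gather_glucose_utility(ctx: GlucoseContext) -> float:
    """Score "Gather Glucose from Cloud" in the range 0..1."""
    if ctx.nearest_cloud_size <= 0.0 or ctx.glucose_needed <= 0.0:
        return 0.0
    # Hunger: 1.0 when storage is empty, 0.0 when the need is already covered.
    hunger = clamp01(1.0 - ctx.stored_glucose / ctx.glucose_needed)
    # Payoff: how much of the shortfall the nearest cloud could cover.
    shortfall = max(ctx.glucose_needed - ctx.stored_glucose, 0.0)
    payoff = clamp01(ctx.nearest_cloud_size / shortfall) if shortfall > 0 else 0.0
    # Assumed weighting: a non-primary food source counts for half.
    diet_weight = 1.0 if ctx.glucose_is_primary_food else 0.5
    score = hunger * payoff * diet_weight
    # Discount the score when hunting prey looks more rewarding.
    return clamp01(score * (1.0 - 0.5 * ctx.best_prey_utility))


starving = GlucoseContext(True, 0.0, 10.0, 20.0, 0.0)
print(gather_glucose_utility(starving))
```

The point is only that each task owns one small, self-contained scoring function, so it can be tuned or replaced without touching the others.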

After the utility of each task is calculated, the AI moves into its decision phase.

Decision

This stage forms the middle of the AI’s thought process, and consists of simple comparisons and priority management.

Due to the nature of balancing many needs and actions in a game such as Thrive, simply having an AI flatly compare every task against every other would not only be relatively resource intensive but would also create plenty of “dumb” behaviors. I suggest we use the Bucket System used in The Sims. The Sims, a game with utility-based AI, separates its utility calculations into groups known as Buckets. These Buckets are then processed with an additional weight on each, allowing the AI to avoid dumb behaviors and stay self-sustaining. An example of this would be processing the Food need at a higher level than the Entertainment need.
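As a rough sketch of the bucket idea, assuming the simplest reading where each bucket's weight just multiplies the raw utilities of the tasks inside it (the bucket names, task names, and weights below are made up for illustration, and The Sims may well do this differently):

```python
from typing import Dict, Optional, Tuple

# Hypothetical buckets and weights; a higher weight means a more pressing need.
BUCKET_WEIGHTS: Dict[str, float] = {
    "survival": 3.0,  # e.g. fleeing a predator
    "feeding": 2.0,   # e.g. gathering glucose, hunting
    "idle": 1.0,      # e.g. tumbling, wandering
}


def choose_task(utilities: Dict[str, Dict[str, float]]) -> Optional[Tuple[str, str]]:
    """Return the (bucket, task) pair with the highest weighted utility.

    `utilities` maps bucket name -> task name -> raw utility in 0..1.
    Returns None when no task scores above zero.
    """
    best: Optional[Tuple[str, str]] = None
    best_score = 0.0
    for bucket, tasks in utilities.items():
        weight = BUCKET_WEIGHTS.get(bucket, 1.0)
        for task, utility in tasks.items():
            score = weight * utility
            if score > best_score:
                best, best_score = (bucket, task), score
    return best


scores = {
    "survival": {"flee": 0.2},
    "feeding": {"gather_glucose": 0.5, "hunt": 0.4},
    "idle": {"tumble": 0.9},
}
print(choose_task(scores))
```

Here tumbling has the highest raw utility, but the feeding bucket's weight lets gathering glucose win, which is the kind of "dumb behavior" the buckets are meant to prevent.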

After it finds the action of the highest utility, it then moves into the action phase.

Action

This stage forms the end of the process, and the part the player actually sees. Once a cell finds an action of the highest utility, it will proceed to execute this task until it finds another task of higher utility.

You might rightly be worried about microbes bouncing rapidly between tasks that have very similar Utility Values. One solution, proposed by this paper, is to give each task inertia. I wholeheartedly agree with this! If you give extra weight to the task already being performed, the AI will want to keep doing that task until a truly better option comes along.
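A minimal sketch of inertia, assuming it is implemented as a flat bonus added to whichever task is currently running (the bonus value and task names are placeholders, and the paper may describe a different formulation):

```python
from typing import Dict, Optional

INERTIA_BONUS = 0.15  # assumed value; would need tuning in practice


def pick_with_inertia(utilities: Dict[str, float], current: Optional[str]) -> Optional[str]:
    """Pick the best task, favouring the one that is already being performed."""
    if not utilities:
        return None

    def effective(task: str) -> float:
        # Only the task in progress receives the inertia bonus.
        bonus = INERTIA_BONUS if task == current else 0.0
        return utilities[task] + bonus

    return max(utilities, key=effective)


# A slightly better alternative is not enough to interrupt the current task...
print(pick_with_inertia({"gather_glucose": 0.50, "hunt": 0.55}, "gather_glucose"))
# ...but a clearly better one is.
print(pick_with_inertia({"gather_glucose": 0.50, "hunt": 0.80}, "gather_glucose"))
```

With these numbers the first call keeps the microbe gathering and the second switches it to hunting.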

Modular Behaviors

Now, onto the Modular Behaviors part. There is something to be said about the potential size of this system. Separating all potential actions into tasks then assigning a value to them can sound extraordinarily big, especially if we account for every possible part a microbe can have.

I suggest we, in this case, only attach specific tasks to the AI if it is physically possible with the AI’s current biology. For example, we can attach all relevant Pilus code to the AI when it is spawned if it actually has a pilus, whereas microbes without a pilus don’t even possess the AI to use one.
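Sketched in Python, and assuming a simple lookup table from task to required organelles (the task and organelle names here are hypothetical, not Thrive identifiers), attaching tasks at spawn time could look something like this:

```python
from typing import Dict, FrozenSet, List, Set

# Hypothetical table: task name -> organelles a microbe must have to perform it.
TASK_REQUIREMENTS: Dict[str, FrozenSet[str]] = {
    "gather_glucose": frozenset(),              # every microbe can do this
    "stab_with_pilus": frozenset({"pilus"}),
    "fire_toxin": frozenset({"toxin_vacuole"}),
}


def tasks_for(organelles: Set[str]) -> List[str]:
    """Return the tasks whose required organelles are all present."""
    return sorted(
        task
        for task, needed in TASK_REQUIREMENTS.items()
        if needed <= organelles  # subset check
    )


print(tasks_for({"pilus"}))
print(tasks_for(set()))
```

A microbe spawned without a pilus never receives the pilus task, so its AI never spends time scoring it.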

There is a loosely similar concept in UE4 Behavior Trees, where each task is a self-contained piece of code that is loaded into a behavior tree and can be changed separately from the tree itself.

The idea behind this is to hopefully make both microbe and multicellular/aware AI light and easily expandable. After all, an exclusively aquatic creature doesn’t need the AI necessary to create anthills!

The Utility System combined with a Modular Task system should allow us to continuously add behaviors related to new features while creating a system that allows for emergent behavior! The distinct phases of the AI's system should also allow for easier debugging and modification.

That’s pretty much the long and short of my concept for a potential AI cleanup/overhaul. I would love to hear from other programmers and designers on this. If you can’t tell, this was one of my primary reasons for joining the project, and the AI is something that could use some cleaning up!